I have compiled a new Intel Modular Server multipath driver for Citrix XenServer 6.1 kernel based on instructions on my earlier post:

Download the ready-made driver for XenServer 6.1 from here:

Importing the XenServer DDK 6.1 into XenServer 6.0.2 from XenCenter got stuck with the message ‘Preparing to import vm’.

The workaround is to extract the DDK ISO file to an NFS ISO storage. Attach that storage to your XenServer host and then import the file from there on the CLI:

xe vm-import filename=/var/run/sr-mount/a653a34e-ec0f-5134-906c-9077cafb8c9d/XS61DDK/ddk/ova.xml

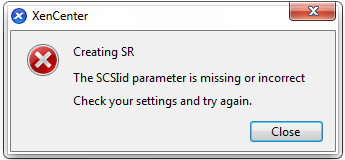

After completing a multipath setup with the updated storage driver you may see the following error when attaching a new hardware HBA in XenCenter. See the screenshot below:

This is caused by the command:

xe sr-probe type=lvmohba

To get around this you have to add the new SR from a CLI, example:

xe sr-create type=lvmohba content-type=user shared=true name-label="Name of SR" host-uuid=<uuid> device-config:device=/dev/disk/by-id/scsi-<id>

I had a situation today where my XenServer pool still had an old NFS ISO library attached, while the NFS share itself had been taken down a long time ago on that specific IP address. After trying different solutions I found that the only one that worked without a restart was to set up the exact same NFS share on the same IP address. Only then can you detach the NFS ISO SR.

After you have re-created the NFS share on the same IP address and the same path, you can detach it from XenCenter or use these CLI commands:

Get the storage repository uuid:

xe sr-list name-label="Your SR name"

Get the uuid of pbd:

xe pbd-list sr-uuid=<UUID of SR>

Unplug all PBDs by issuing:

xe pbd-unplug uuid=<UUID of PBD>

Forget the SR:

xe sr-forget uuid=<UUID of SR>

You can also try to use the --force option with any of the commands above.
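The steps above can be chained so that no UUID has to be copied by hand. This is only a sketch under a few assumptions: `forget_sr` is a helper name I made up, the SR name-label is assumed to match exactly one SR, and the PBDs are looked up with the `sr-uuid=` filter and `--minimal` output of `xe`.

```shell
# Sketch: detach a stale SR by name, using the xe subcommands from the steps above.
# forget_sr is a hypothetical helper name, not part of XenServer.
forget_sr() {
    local name="$1" sr_uuid pbd_uuid

    # --minimal prints bare UUIDs (comma-separated if there are several).
    # This sketch assumes the name-label matches exactly one SR.
    sr_uuid=$(xe sr-list name-label="$name" --minimal)
    if [ -z "$sr_uuid" ]; then
        echo "No SR named '$name' found" >&2
        return 1
    fi

    # Unplug every PBD that connects a host to this SR.
    for pbd_uuid in $(xe pbd-list sr-uuid="$sr_uuid" --minimal | tr ',' ' '); do
        xe pbd-unplug uuid="$pbd_uuid" || return 1
    done

    # With all PBDs unplugged the SR can be forgotten.
    xe sr-forget uuid="$sr_uuid"
}
```

Call it on the pool master as `forget_sr "Your SR name"`. It stops at the first PBD that refuses to unplug, so nothing is forgotten while a host is still attached.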

Conclusion:

Managed to remove the NFS SR without a restart.

The defunct NFS storage repository was actually preventing any other SR-related actions on my pool.

1. If your current pool master server is online, you need to connect to it by ssh.

Before changing the pool master you have to disable the HA feature temporarily.

xe pool-ha-disable

Next, get the list of your current servers in the pool.

xe host-list

Get the uuid of the slave you want to make the new master and type in the command:

xe pool-designate-new-master host-uuid=<new-master-uuid>

xe pool-ha-enable
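If you would rather not copy the UUID out of the host list, the lookup and the designate step can be combined. A small sketch, assuming the new master is identified by its name-label; `promote_host` is a made-up helper name, and HA is deliberately left alone here, so disable it first as described above.

```shell
# Sketch: resolve a host name-label to its UUID and make that host the pool master.
# promote_host is a hypothetical helper; run it on the current master with HA disabled.
promote_host() {
    local name="$1" host_uuid

    host_uuid=$(xe host-list name-label="$name" --minimal)
    case "$host_uuid" in
        "")  echo "No host named '$name'" >&2; return 1 ;;
        *,*) echo "Name '$name' matches several hosts" >&2; return 1 ;;
    esac

    xe pool-designate-new-master host-uuid="$host_uuid"
}
```

For example: `promote_host xenserver2`, where `xenserver2` is a placeholder for one of your own slave names.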

2. If your pool master is already down for some reason, you can still promote any slave to master of the pool.

Issue the following commands from the slave that will become the new master:

xe pool-emergency-transition-to-master

xe pool-recover-slaves

Good luck with the new xen master 🙂

Intel Modular Server requires a manually built multipath driver in order to run a stable instance of XenServer 6.x.

Let’s build this driver for the Citrix XenServer 6.0.2E005 kernel from a RedHat driver available on the Intel site, or from a local copy on my site: RHEL6_MPIO_Setup_20110712.

1. Download the DDK VM

Download XenServer 6.0.2 XS602E005 Driver Development Kit from http://support.citrix.com/article/CTX133814

2. Mount the downloaded iso file.

On Mac you can mount by double-clicking on the iso file.

3. Import the DDK VM

Open XenCenter and choose File -> Import.

Choose the ova.xml file from the mounted iso.

Choose a Home Server for the VM. The VM will require 512 MB of memory.

Choose Storage. The VM will require approximately 2 GB of space.

Do not set up networking at this step.

Click finish and wait until the upload completes.

4. Set up networking

Choose the new VM from XenCenter and click on the Networking tab.

Click Add Interface to enable your nic.

5. Start the new DDK VM.

Log in from the XenCenter console and set your root password.

If you do not have a dhcp server available, set up a static ip by editing /etc/sysconfig/network-scripts/ifcfg-eth0

You may also have to edit /etc/resolv.conf and add a nameserver.

Additionally, set up a gateway in /etc/sysconfig/network
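As an illustration of the static setup, here is a sketch that prints a minimal ifcfg-eth0 body. `static_ifcfg` is a name I made up, and the keys shown are the usual ones for a RedHat-style network script, which I am assuming the DDK VM uses; adjust them to whatever your existing file contains.

```shell
# Sketch: print a minimal static ifcfg-eth0 body for the given address and netmask.
# static_ifcfg is a hypothetical helper; the key names assume RedHat-style network scripts.
static_ifcfg() {
    local ip="$1" netmask="$2"
    printf 'DEVICE=eth0\nBOOTPROTO=static\nONBOOT=yes\nIPADDR=%s\nNETMASK=%s\n' \
        "$ip" "$netmask"
}
```

For example, `static_ifcfg 192.0.2.10 255.255.255.0 > /etc/sysconfig/network-scripts/ifcfg-eth0` followed by `service network restart`. The address is a placeholder from the documentation range, not something from my setup.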

6. Log in

If your network is set up correctly you can log in with ssh.

For convenience I also set up mc by running:

yum install mc --enablerepo=base

7. Download and unpack the RedHat driver to the VM

wget http://downloadmirror.intel.com/18617/eng/RHEL6_MPIO_Setup_20110712.zip

unzip RHEL6_MPIO_Setup_20110712.zip

8. Install the source rpm

rpm -ivh --nomd5 scsi_dh_alua_CBVTrak-2-1.src.rpm

cd /usr/src/redhat/SPECS

rpmbuild -ba scsi_dh_alua.spec

Now, the new rpm is located at /usr/src/redhat/RPMS/i386/scsi_dh_alua_CBVTrak-2-1.i386.rpm

I have completed this whole process and here is the driver rpm with multipath.conf for download:

scsi_dh_alua_intelmodular_cbvtrak_xs602e005.i386.tar.gz

In fact this driver is an exact match to the driver compiled with the initial 6.0.2.542 kernel.

Here’s my earlier post on how to install this driver and get your Intel Modular Server running:

http://www.xenlens.com/citrix-xenserver-6-0-2-multipathing-support-intel-modular-server/

Sometimes a VM hangs and the XenCenter controls (Reboot and Force Reboot) do not work.

You may see an error when you try to force a reboot (The operation could not be performed because a domain still exists for the specified VM.).

This is the process for rebooting the hung VM from the XenServer host console.

To get the uuid of the VM that is hung:

xe vm-list

Get the vm domain id by uuid:

list_domains

Then destroy the domain of the vm:

/opt/xensource/debug/destroy_domain -domid <domid>

Now you can force a reboot on your vm:

xe vm-reboot uuid=<uuid> --force

If it has no effect you can also try:

xe vm-reset-powerstate uuid=<uuid> --force

Note that it may take up to 3 minutes to complete.
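Matching the UUID against the list_domains output by eye is error-prone, so here is a small sketch that does the lookup. It assumes list_domains prints one `id | uuid | state` row per domain, and `domid_for_uuid` is a hypothetical helper name.

```shell
# Sketch: print the domain id that belongs to a VM uuid.
# Reads list_domains output on stdin; assumes "id | uuid | state" rows.
# domid_for_uuid is a hypothetical helper name.
domid_for_uuid() {
    awk -F'|' -v uuid="$1" '
        {
            id = $1; u = $2
            gsub(/[ \t]/, "", id)   # strip padding around the id column
            gsub(/[ \t]/, "", u)    # strip padding around the uuid column
        }
        u == uuid { print id }
    '
}
```

Usage: `list_domains | domid_for_uuid <uuid>`. The number it prints is the value to pass to destroy_domain.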

Installing Fedora 17 requires a kickstart file which sets a recognizable grub config file for XenServer as well as speeds up your installation. Feel free to modify any parameters in the kickstart file.

Install should auto complete.

If you have an additional mezzanine card installed in your Compute Module and a secondary Switch Module installed in your Intel Modular Server, you can set up network redundancy with NIC bonding in XenServer. Initially eth0 and eth1 are assigned to the main NIC, while eth2 and eth3 become available when the secondary mezzanine card is installed in your Compute Module. Bonding for redundancy only makes sense if you bond interfaces from different NICs. I would suggest bonding eth0 with eth2 and eth1 with eth3. You can set up bonds in XenCenter.

In bonding mode the system sets one of the bond slaves as active. Since both slaves apparently have an active connection to one of the switches, the system picks the active slave randomly. It only checks whether the link to the switch is up and nothing beyond that. Since only one of my switches currently has an active internet connection, a startup script is needed to manually set the active slave to the interface on that switch. In the future this script could be developed to choose the active slave by checking the connection on each bond slave. Currently this is manual.

The startup script:

# cat /etc/init.d/ovs-set-active-slave

#! /bin/bash

#

# ovs-set-active-slave Set bond active slaves to eth0 on bond0 and eth1 on bond1

#

# chkconfig: 2345 14 90

# description: Set bond active slaves to eth0 on bond0 and eth1 on bond1

#

#

### BEGIN INIT INFO

# Provides: $ovs-set-active-slave

### END INIT INFO

start() {

/usr/bin/ovs-appctl bond/set-active-slave bond0 eth0

/usr/bin/ovs-appctl bond/set-active-slave bond1 eth1

}

status() {

:

}

stop() {

:

}

restart() {

stop

start

}

case "$1" in

start)

start

;;

stop)

stop

;;

status)

status

;;

restart)

restart

;;

*)

echo "Unknown action '$1'."

;;

esac

Activate script to run on server startup:

# chkconfig ovs-set-active-slave on

After a reboot you should have eth0 as the active slave on bond0 and eth1 as the active slave on bond1:

# ovs-appctl bond/show bond0

bond_mode: active-backup

lacp: off

bond-detect-mode: carrier

updelay: 31000 ms

downdelay: 200 ms

slave eth2: enabled

slave eth0: enabled

active slave

# ovs-appctl bond/show bond1

bond_mode: active-backup

lacp: off

bond-detect-mode: carrier

updelay: 31000 ms

downdelay: 200 ms

slave eth1: enabled

active slave

slave eth3: enabled
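To check the result from a script rather than by reading the output, the active slave can be pulled out of `bond/show`. A minimal sketch, assuming the output has one `slave <name>: ...` line per interface with `active slave` on a following line, as in my output above; `active_slave` is a made-up helper name.

```shell
# Sketch: print the name of the active slave from `ovs-appctl bond/show <bond>` output.
# Reads the output on stdin; active_slave is a hypothetical helper name.
active_slave() {
    awk '
        /^slave /      { name = $2; sub(/:$/, "", name) }  # remember the last slave seen
        /active slave/ { print name }                      # the marker follows its slave line
    '
}
```

For example, `[ "$(ovs-appctl bond/show bond0 | active_slave)" = eth0 ] || ovs-appctl bond/set-active-slave bond0 eth0` only switches when eth0 is not already active.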

Note: When the main switch fails you have to log in through Intel Modular Server KVM and set active slaves to the secondary switch manually.

Note2: If you do a Switch 1 reset from the Management Module then Xenserver will automatically change active slaves to the Switch 2. If you still want to use Switch 1 after the reset then you have to set active slaves back to Switch 1 manually.

Debian 7 Wheezy was installed by first installing Debian 6 Squeeze and then adding testing repo to /etc/apt/sources.list.

# cat sources.list

# deb http://cdn.debian.net/debian/ squeeze main

deb http://cdn.debian.net/debian/ testing main contrib non-free

deb-src http://cdn.debian.net/debian/ testing main contrib non-free

#deb http://cdn.debian.net/debian/ squeeze main

#deb-src http://cdn.debian.net/debian/ squeeze main

deb http://security.debian.org/ testing/updates main

deb-src http://security.debian.org/ testing/updates main

#deb http://security.debian.org/ squeeze/updates main

#deb-src http://security.debian.org/ squeeze/updates main

# squeeze-updates, previously known as 'volatile'

deb http://cdn.debian.net/debian/ squeeze-updates main

deb-src http://cdn.debian.net/debian/ squeeze-updates main

Before you do a dist-upgrade, make sure to install xe-tools.

Then do a dist upgrade:

apt-get update

apt-get upgrade

apt-get dist-upgrade

Before rebooting into the new 3.2.x kernel, make sure you add the nobarrier option to each virtual disk in /etc/fstab, or you may see errors.
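Editing every entry by hand is easy to get wrong, so here is a sketch of a filter that adds the option. It assumes your virtual disks are the ext3 or ext4 entries in fstab, and `add_nobarrier` is a made-up helper name. It writes to stdout so you can review the result before replacing the file.

```shell
# Sketch: add nobarrier to every ext3/ext4 entry of an fstab read from stdin.
# add_nobarrier is a hypothetical helper; comments, blank lines, swap and
# entries that already carry nobarrier pass through unchanged.
add_nobarrier() {
    awk '
        /^[ \t]*#/ || NF < 4 { print; next }
        $3 ~ /^ext[34]$/ && $4 !~ /(^|,)nobarrier(,|$)/ { $4 = $4 ",nobarrier" }
        { print }
    '
}
```

For example, `add_nobarrier < /etc/fstab > /tmp/fstab.new`, then compare the two files with diff before copying the new one into place. Changed lines come out with single spaces between fields, which mount does not mind.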

Disks used:

xvda – Intel 320 SSD RAID1

xvdb – Seagate Savvio 10K.5 ST9900805SS SAS RAID1

Performance results:

# hdparm -tT /dev/xvda

/dev/xvda:

Timing cached reads: 14152 MB in 1.99 seconds = 7116.64 MB/sec

Timing buffered disk reads: 1794 MB in 3.00 seconds = 597.91 MB/sec

# hdparm -tT /dev/xvdb

/dev/xvdb:

Timing cached reads: 14210 MB in 1.99 seconds = 7145.46 MB/sec

Timing buffered disk reads: 1834 MB in 3.00 seconds = 611.16 MB/sec

Buffered and cached disk reads with hdparm.

Seek time performance with python seeker.

Additional performance with fio. fio tutorial.

Tests were performed on a XenServer 6.0.2 guest Debian 6 Squeeze 64 bit.

There was no significant difference when enabling HDD Write Back Cache in IMS management module.

xvda – Intel 320 SSD RAID1

xvdb – Seagate Savvio 10K.5 ST9900805SS SAS RAID1

hdparm:

# hdparm -tT /dev/xvda

/dev/xvda:

Timing cached reads: 13578 MB in 1.99 seconds = 6824.44 MB/sec

Timing buffered disk reads: 1422 MB in 3.00 seconds = 473.73 MB/sec

# hdparm -tT /dev/xvdb

/dev/xvdb:

Timing cached reads: 13618 MB in 1.99 seconds = 6844.60 MB/sec

Timing buffered disk reads: 1322 MB in 3.00 seconds = 440.17 MB/sec

seeker.py:

# ./seeker.py /dev/xvda

Benchmarking /dev/xvda [10.00 GB]

10/0.00 = 3310 seeks/second

0.30 ms random access time

100/0.02 = 5053 seeks/second

0.20 ms random access time

1000/0.18 = 5635 seeks/second

0.18 ms random access time

10000/1.82 = 5498 seeks/second

0.18 ms random access time

100000/17.14 = 5834 seeks/second

0.17 ms random access time

# ./seeker.py /dev/xvdb

Benchmarking /dev/xvdb [20.00 GB]

10/0.00 = 2529 seeks/second

0.40 ms random access time

100/0.01 = 8396 seeks/second

0.12 ms random access time

1000/0.13 = 7611 seeks/second

0.13 ms random access time

10000/1.16 = 8620 seeks/second

0.12 ms random access time

100000/11.25 = 8890 seeks/second

0.11 ms random access time

fio:

# cat random-read-test-xvda.fio

[random-read]

rw=randread

size=128m

directory=/tmp

# fio random-read-test-xvda.fio

random-read: (g=0): rw=randread, bs=4K-4K/4K-4K, ioengine=sync, iodepth=1

Starting 1 process

random-read: Laying out IO file(s) (1 file(s) / 128MB)

Jobs: 1 (f=1): [r] [100.0% done] [7000K/0K /s] [1709/0 iops] [eta 00m:00s]

random-read: (groupid=0, jobs=1): err= 0: pid=11333

read : io=131072KB, bw=7277KB/s, iops=1819, runt= 18012msec

clat (usec): min=6, max=6821, avg=541.36, stdev=112.69

bw (KB/s) : min= 6552, max=10144, per=100.18%, avg=7289.37, stdev=840.90

cpu : usr=0.44%, sys=6.86%, ctx=32882, majf=0, minf=24

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued r/w: total=32768/0, short=0/0

lat (usec): 10=0.01%, 250=0.01%, 500=17.30%, 750=81.96%, 1000=0.66%

lat (msec): 2=0.05%, 4=0.01%, 10=0.01%

Run status group 0 (all jobs):

READ: io=131072KB, aggrb=7276KB/s, minb=7451KB/s, maxb=7451KB/s, mint=18012msec, maxt=18012msec

Disk stats (read/write):

xvda: ios=32561/3, merge=0/7, ticks=17340/0, in_queue=17340, util=96.44%

# cat random-read-test-xvdb.fio

[random-read]

rw=randread

size=128m

directory=/home

# fio random-read-test-xvdb.fio

random-read: (g=0): rw=randread, bs=4K-4K/4K-4K, ioengine=sync, iodepth=1

Starting 1 process

random-read: Laying out IO file(s) (1 file(s) / 128MB)

Jobs: 1 (f=1): [r] [100.0% done] [6688K/0K /s] [1633/0 iops] [eta 00m:00s]

random-read: (groupid=0, jobs=1): err= 0: pid=11525

read : io=131072KB, bw=7050KB/s, iops=1762, runt= 18592msec

clat (usec): min=252, max=36782, avg=558.96, stdev=289.86

bw (KB/s) : min= 5832, max= 9416, per=100.13%, avg=7057.95, stdev=733.93

cpu : usr=0.90%, sys=6.39%, ctx=32905, majf=0, minf=24

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued r/w: total=32768/0, short=0/0

lat (usec): 500=19.77%, 750=79.20%, 1000=0.79%

lat (msec): 2=0.19%, 4=0.02%, 10=0.02%, 20=0.01%, 50=0.01%

Run status group 0 (all jobs):

READ: io=131072KB, aggrb=7049KB/s, minb=7219KB/s, maxb=7219KB/s, mint=18592msec, maxt=18592msec

Disk stats (read/write):

xvdb: ios=32447/38, merge=0/4, ticks=17836/1012, in_queue=18848, util=96.56%

# cat random-read-test-aio-xvda.fio

[random-read]

rw=randread

size=128m

directory=/tmp

ioengine=libaio

iodepth=8

direct=1

invalidate=1

# fio random-read-test-aio-xvda.fio

random-read: (g=0): rw=randread, bs=4K-4K/4K-4K, ioengine=libaio, iodepth=8

Starting 1 process

Jobs: 1 (f=1): [r] [100.0% done] [33992K/0K /s] [8299/0 iops] [eta 00m:00s]

random-read: (groupid=0, jobs=1): err= 0: pid=12384

read : io=131072KB, bw=37567KB/s, iops=9391, runt= 3489msec

slat (usec): min=5, max=65, avg= 9.79, stdev= 1.79

clat (usec): min=427, max=42452, avg=833.25, stdev=714.35

bw (KB/s) : min=31520, max=42024, per=101.20%, avg=38017.33, stdev=4144.75

cpu : usr=6.19%, sys=12.39%, ctx=29265, majf=0, minf=32

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=100.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.1%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued r/w: total=32768/0, short=0/0

lat (usec): 500=2.48%, 750=42.14%, 1000=38.11%

lat (msec): 2=16.97%, 4=0.19%, 10=0.08%, 50=0.02%

Run status group 0 (all jobs):

READ: io=131072KB, aggrb=37567KB/s, minb=38468KB/s, maxb=38468KB/s, mint=3489msec, maxt=3489msec

Disk stats (read/write):

xvda: ios=32017/0, merge=5/6, ticks=26760/0, in_queue=28344, util=96.71%

# cat random-read-test-aio-xvdb.fio

[random-read]

rw=randread

size=128m

directory=/home

ioengine=libaio

iodepth=8

direct=1

invalidate=1

# fio random-read-test-aio-xvdb.fio

random-read: (g=0): rw=randread, bs=4K-4K/4K-4K, ioengine=libaio, iodepth=8

Starting 1 process

Jobs: 1 (f=1): [r] [75.0% done] [43073K/0K /s] [11K/0 iops] [eta 00m:01s]

random-read: (groupid=0, jobs=1): err= 0: pid=12464

read : io=131072KB, bw=41783KB/s, iops=10445, runt= 3137msec

slat (usec): min=7, max=409, avg=11.79, stdev= 3.66

clat (usec): min=274, max=1464, avg=745.43, stdev=141.13

bw (KB/s) : min=40416, max=42096, per=99.99%, avg=41777.33, stdev=668.21

cpu : usr=1.40%, sys=21.94%, ctx=27542, majf=0, minf=32

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=100.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.1%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued r/w: total=32768/0, short=0/0

lat (usec): 500=2.23%, 750=49.39%, 1000=46.19%

lat (msec): 2=2.19%

Run status group 0 (all jobs):

READ: io=131072KB, aggrb=41782KB/s, minb=42785KB/s, maxb=42785KB/s, mint=3137msec, maxt=3137msec

Disk stats (read/write):

xvdb: ios=30272/0, merge=0/0, ticks=22772/0, in_queue=22772, util=96.17%
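The one number I compare between runs is the iops figure on the per-job `read :` line. A small sketch for pulling it out of saved fio output, assuming the output format shown above; `fio_iops` is a made-up helper name.

```shell
# Sketch: print the iops value from each per-job "read :" line of fio output.
# Reads fio output on stdin; fio_iops is a hypothetical helper name.
fio_iops() {
    sed -n 's/^ *read *:.*iops=\([0-9]*\).*/\1/p'
}
```

For example, `fio random-read-test-xvda.fio | fio_iops`. For the four runs above this gives 1819 and 1762 for the sync tests on xvda and xvdb, and 9391 and 10445 for the libaio tests at iodepth=8, so queueing eight requests gives roughly five to six times the throughput on both disks.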

SSD performance should be much better than SAS but is not outperforming it.

I guess this might be a controller limitation.

Please comment about your experience with IMS disk performance.

Install the pluginstall script:

npm install -g pluginstall

Check if it was correctly installed:

npm ls -g | grep pluginstall

Get all Cordova plugins:

git clone git://github.com/alunny/phonegap-plugins.git

Go to the folder just created by git:

cd phonegap-plugins

Go to crossplatform:

git checkout crossplatform

Go to ChildBrowser plugin path:

cd CrossPlatform/ChildBrowser/

Go to your ios app folder and then execute:

pluginstall ios . ~/screencast/phonegap-plugins/CrossPlatform/ChildBrowser/

Add js file to your index.html file:

<script src="childbrowser.js" type="text/javascript" charset="utf-8"></script>

First, make a new template on your Dom0 xen host:

xe template-list name-label="Ubuntu Lucid Lynx 10.04 (64-bit)" --minimal

xe vm-clone uuid=[UUID from step 1 here] new-name-label="Ubuntu Precise Pangolin 12.04 (64-bit)"

xe template-param-set other-config:default_template=true other-config:debian-release=precise uuid=[UUID from step 2 here]
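The same three commands can be chained so the UUIDs are passed along automatically. A sketch, assuming the Lucid template exists under exactly that name-label; it relies on `xe vm-clone` printing the UUID of the new clone, and `make_precise_template` is a made-up helper name.

```shell
# Sketch: clone the Lucid template and mark the clone as a Precise template.
# make_precise_template is a hypothetical helper; run it on the Dom0 host.
make_precise_template() {
    local base_uuid new_uuid

    # Step 1: look up the existing template.
    base_uuid=$(xe template-list name-label="Ubuntu Lucid Lynx 10.04 (64-bit)" --minimal)
    if [ -z "$base_uuid" ]; then
        echo "Base template not found" >&2
        return 1
    fi

    # Step 2: clone it; vm-clone prints the UUID of the clone.
    new_uuid=$(xe vm-clone uuid="$base_uuid" \
        new-name-label="Ubuntu Precise Pangolin 12.04 (64-bit)") || return 1

    # Step 3: flag the clone as a default template for the precise release.
    xe template-param-set other-config:default_template=true \
        other-config:debian-release=precise uuid="$new_uuid"
}
```

Run `make_precise_template` once; the new template should then show up in the XenCenter New VM wizard.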

Create a new VM in XenCenter and choose the VM template you just created

Enter the following as install URL:

http://archive.ubuntu.net/ubuntu/

During the Ubuntu Server install, create a separate /boot partition of 500 MB and set its type to ext3, as pygrub does not support ext4 very well.

After installation is complete, log in and make yourself root:

sudo -s

Next, install kernel with virtual support and remove default kernel:

apt-get install linux-virtual linux-image-virtual linux-headers-virtual

dpkg -l | grep generic

apt-get remove [all linux*generic packages from last command]

Update grub:

update-grub

Mount xs-tools to your VM dvd drive in XenCenter and install xen guest tools for VM monitoring:

mount /dev/xvdd /mnt

cd /mnt/Linux

dpkg -i *amd64.deb

Reboot:

reboot

Edit /etc/fstab and add nobarrier option to every virtual disk, example /etc/fstab:

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a

# device; this may be used with UUID= as a more robust way to name devices

# that works even if disks are added and removed. See fstab(5).

#

#

proc /proc proc nodev,noexec,nosuid 0 0

# / was on /dev/xvda5 during installation

UUID=a4588f98-9db1-4e85-adf4-e03a6c5202d4 / ext3 nobarrier 0 1

# /boot was on /dev/xvda1 during installation

UUID=12c79a36-e3b8-49c4-8d20-5d8c302c7faf /boot ext3 nobarrier 0 2

# /home was on /dev/xvdb1 during installation

UUID=7558d15d-8c4b-4d50-8872-72fd2974c946 /home ext3 nobarrier 0 2

# swap was on /dev/xvdb5 during installation

UUID=cb9aef04-d7ca-4eb9-940c-87d986729169 none swap sw 0 0

Reboot:

reboot

That should do it, have fun with Ubuntu Server 12.04.

1. Prerequisites

First of all, create at least one Storage Repository (SR) and one Virtual Disk (VD) in the Intel Management Module GUI; this is where you will install the Citrix XenServer Dom0 host. The VD needs to be larger than 16 GB. Whether you install the host on SAS or SSD makes no difference to guest performance. I installed my hosts on SAS and saved the valuable SSD space for guests. Assign the VD to the server you will install XenServer on. Set affinity to SCM1 for all VDs you create. Open the KVM session for the appropriate Compute Module and attach the XenServer installation ISO. Power on the Compute Module.

2. Install Citrix Xenserver 6.0.2

Type ‘multipath’ on initial install prompt to begin installation with multipath support.

Choose the VD you just assigned to the Compute Module as installation target.

After installation is complete, you may see disk access errors during bootup, this is normal.

3. Install patched driver and change multipath.conf file

The original driver included with XenServer 6.0.2 is unstable with multipathing.

I compiled a stable driver rpm with the Citrix XenServer Driver Development Kit (DDK) from the RedHat driver available on the Intel site.

Note that this has been compiled against 6.0.2 and you may need to recompile if you use a more recent XenServer version.

Download the driver and multipath.conf file: scsi_dh_alua_intelmodular_cbvtrak.i386.tar.gz

Unpack the file:

tar -zxvf scsi_dh_alua_intelmodular_cbvtrak.i386.tar.gz

Install scsi_dh_alua driver rpm patched for Intel Modular Server.

The driver already exists so we have to force in order to replace it.

rpm -ivh --force scsi_dh_alua_intelmodular_cbvtrak.i386.rpm

Backup your current /etc/multipath.conf to /etc/multipath.conf.originalxs602:

cp /etc/multipath.conf /etc/multipath.conf.originalxs602

Move the multipath.conf file from the unpacked tar to the /etc folder:

mv multipath.conf /etc/multipath.conf

Warning: do not copy and paste the file contents, as that can corrupt the config file and cause the server's multipath setup to fail.

Reboot

You may still see disk access errors during bootup, this is normal.

At this point you should already see both paths active, see my sample:

multipath -ll

Response:

2223700015563b1df dm-0 Intel,Multi-Flex

[size=16G][features=1 queue_if_no_path][hwhandler=0][rw]

\_ round-robin 0 [prio=50][active]

\_ 0:0:0:0 sda 8:0 [active][ready]

\_ round-robin 0 [prio=1][enabled]

\_ 0:0:1:0 sdb 8:16 [active][ready]

4. Make a new initial ramdisk

Backup your current initrd.img and initrd.kdump

mv initrd-$(uname -r).img initrd-$(uname -r).img.old

mv initrd-2.6.32.12-0.7.1.xs6.0.2.542.170665kdump.img initrd-2.6.32.12-0.7.1.xs6.0.2.542.170665kdump.img.old

Make new initial ramdisk by running both mkinitrd commands found in

cat initrd-$(uname -r).img.cmd

cat initrd-2.6.32.12-0.7.1.xs6.0.2.542.170665kdump.img.cmd

Reboot

You should see no more errors during bootup

Check your paths

multipath -ll

With one VD attached you should see something like this:

22202000155fdb615 dm-0 Intel,Multi-Flex

[size=100G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=50][active]

\_ 0:0:0:0 sda 8:0 [active][ready]

\_ round-robin 0 [prio=1][enabled]

\_ 0:0:1:0 sdb 8:16 [active][ready]

As you can see, sda is in use (active) and sdb is on standby (enabled).
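With more VDs attached it gets tedious to check every device by eye. Here is a sketch that counts the ready paths per device, assuming the `multipath -ll` layout shown above (a `<wwid> dm-N ...` header line followed by path lines ending in `[active][ready]`); `ready_paths` is a made-up helper name.

```shell
# Sketch: print "<wwid> <number of [active][ready] paths>" for each multipath device.
# Reads `multipath -ll` output on stdin; ready_paths is a hypothetical helper name.
ready_paths() {
    awk '
        / dm-[0-9]+ /         { if (wwid != "") print wwid, n; wwid = $1; n = 0 }
        /\[active\]\[ready\]/ { n++ }
        END                   { if (wwid != "") print wwid, n }
    '
}
```

Usage: `multipath -ll | ready_paths`. With both Storage Controller Modules in place every device should report 2.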

4. Create and link other VD-s to the Compute Module

From the Intel Management Module GUI, link the VDs to the Compute Module.

In XenCenter right click on Pool – New SR – Hardware HBA.

Check your paths:

multipath -ll

Here is a sample response from my setup:

22202000155fdb615 dm-0 Intel,Multi-Flex

[size=100G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=50][active]

\_ 0:0:2:0 sda 8:0 [active][ready]

\_ round-robin 0 [prio=1][enabled]

\_ 0:0:1:0 sdc 8:32 [active][ready]

222ac00015543fe63 dm-5 Intel,Multi-Flex

[size=420G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=50][enabled]

\_ 0:0:2:10 sde 8:64 [active][ready]

\_ round-robin 0 [prio=1][enabled]

\_ 0:0:1:10 sdd 8:48 [active][ready]

5. Modify your lvm.conf to filter out /dev/sd* when running commands: pvs, vgs, lvs

Find the line starting with ‘filter’ in /etc/lvm/lvm.conf

Edit it as follows:

filter = [ "r|/dev/sd.*|", "r|/dev/xvd.|", "r|/dev/VG_Xen.*/*|"]

6. Updating, patching, hotfixes

Running hotfix XS602E004 is safe (kernel is not updated).

Running hotfix XS602E005 is safe as update process will rebuild your initrd as well.

7. Failover testing on Intel Modular Server with multipathing

See my other post with successful failover tests with this configuration.

Both Storage Controller Modules attached and Intel Modular Server running on Citrix XenServer 6.0.2 with the patched scsi_dh_alua driver.

See my other blog post on how to set up stable multipathing on IMS with Citrix Xenserver 6.0.2

Initial multipath state:

[root@xenserver1 ~]# multipath -ll

22202000155fdb615 dm-0 Intel,Multi-Flex

[size=100G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=50][active]

\_ 0:0:0:0 sda 8:0 [active][ready]

\_ round-robin 0 [prio=1][enabled]

\_ 0:0:1:0 sdb 8:16 [active][ready]

Note that I waited around 5 minutes after each SCM pull out / push back in order to let the system stabilize.

I did not reboot the host between these events.

Messages:

Apr 7 17:26:35 xenserver1 kernel: sd 0:0:1:0: [sdb] Synchronizing SCSI cache

Apr 7 17:26:35 xenserver1 kernel: sd 0:0:1:0: [sdb] Result: hostbyte=DID_NO_CONNECT driverbyte=DRIVER_OK

Apr 7 17:26:35 xenserver1 kernel: mptsas: ioc0: delete expander: num_phys 25, sas_addr (0x5001e671d12253ff)

Apr 7 17:26:35 xenserver1 kernel: mptbase: ioc0: LogInfo(0x31140000): Originator={PL}, Code={IO Executed}, SubCode(0x0000)

Apr 7 17:26:35 xenserver1 kernel: mptscsih: ioc0: ERROR – Received a mf that was already freed

Apr 7 17:26:35 xenserver1 kernel: mptscsih: ioc0: ERROR – req_idx=beaf req_idx_MR=43 mf=ec984980 mr=ec9825d0 sc=(null)

Apr 7 17:26:35 xenserver1 multipathd: sdb: remove path (operator)

Apr 7 17:26:35 xenserver1 multipathd: 22202000155fdb615: load table [0 209715200 multipath 1 queue_if_no_path 1 alua 1 1 round-robin 0 1 1 8:0 100]

Apr 7 17:26:35 xenserver1 multipathd: Path event for 22202000155fdb615, calling mpathcount

Apr 7 17:26:42 xenserver1 kernel: sd 0:0:0:0: alua: port group 00 state A supports touSnA

Multipath state:

[root@xenserver1 ~]# multipath -ll

22202000155fdb615 dm-0 Intel,Multi-Flex

[size=100G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=50][active]

\_ 0:0:0:0 sda 8:0 [active][ready]

Messages:

Apr 7 17:34:26 xenserver1 kernel: mptsas: ioc0: add expander: num_phys 25, sas_addr (0x5001e671d12253ff)

Apr 7 17:34:27 xenserver1 kernel: mptsas: ioc0: attaching ssp device: fw_channel 0, fw_id 1, phy 11, sas_addr 0x500015500002050a

Apr 7 17:34:27 xenserver1 kernel: target0:0:2: mptsas: ioc0: add device: fw_channel 0, fw_id 1, phy 11, sas_addr 0x500015500002050a

Apr 7 17:34:27 xenserver1 kernel: scsi 0:0:2:0: Direct-Access Intel Multi-Flex 0308 PQ: 0 ANSI: 5

Apr 7 17:34:27 xenserver1 kernel: scsi 0:0:2:0: mptscsih: ioc0: qdepth=64, tagged=1, simple=1, ordered=0, scsi_level=6, cmd_que=1

Apr 7 17:34:27 xenserver1 kernel: scsi 0:0:2:0: alua: supports explicit TPGS

Apr 7 17:34:27 xenserver1 kernel: scsi 0:0:2:0: alua: port group 01 rel port 06

Apr 7 17:34:27 xenserver1 kernel: scsi 0:0:2:0: alua: port group 01 state S supports touSnA

Apr 7 17:34:27 xenserver1 kernel: sd 0:0:2:0: Attached scsi generic sg1 type 0

Apr 7 17:34:27 xenserver1 kernel: sd 0:0:2:0: [sdb] 209715200 512-byte logical blocks: (107 GB/100 GiB)

Apr 7 17:34:27 xenserver1 kernel: sd 0:0:2:0: [sdb] Write Protect is off

Apr 7 17:34:27 xenserver1 kernel: sd 0:0:2:0: [sdb] Write cache: enabled, read cache: enabled, supports DPO and FUA

Apr 7 17:34:27 xenserver1 kernel: sdb:

Apr 7 17:34:27 xenserver1 kernel: ldm_validate_partition_table(): Disk read failed.

Apr 7 17:34:27 xenserver1 kernel: unable to read partition table

Apr 7 17:34:27 xenserver1 kernel: sd 0:0:2:0: [sdb] Attached SCSI disk

Apr 7 17:34:29 xenserver1 multipathd: sdb: add path (operator)

Apr 7 17:34:29 xenserver1 multipathd: 22202000155fdb615: load table [0 209715200 multipath 1 queue_if_no_path 1 alua 2 1 round-robin 0 1 1 8:0 100 round-robin 0 1 1

Apr 7 17:34:29 xenserver1 multipathd: Path event for 22202000155fdb615, calling mpathcount

Apr 7 17:34:43 xenserver1 kernel: sd 0:0:0:0: alua: port group 00 state A supports touSnA

Multipath state:

22202000155fdb615 dm-0 Intel,Multi-Flex

[size=100G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=50][active]

\_ 0:0:0:0 sda 8:0 [active][ready]

\_ round-robin 0 [prio=1][enabled]

\_ 0:0:2:0 sdb 8:16 [active][ready]

Messages:

Apr 7 17:39:13 xenserver1 kernel: sd 0:0:2:0: [sdb] Synchronizing SCSI cache

Apr 7 17:39:13 xenserver1 kernel: sd 0:0:2:0: [sdb] Result: hostbyte=DID_NO_CONNECT driverbyte=DRIVER_OK

Apr 7 17:39:13 xenserver1 kernel: mptsas: ioc0: delete expander: num_phys 25, sas_addr (0x5001e671d12253ff)

Apr 7 17:39:13 xenserver1 multipathd: sdb: remove path (operator)

Apr 7 17:39:13 xenserver1 multipathd: 22202000155fdb615: load table [0 209715200 multipath 1 queue_if_no_path 1 alua 1 1 round-robin 0 1 1 8:0 100]

Apr 7 17:39:13 xenserver1 multipathd: Path event for 22202000155fdb615, calling mpathcount

Apr 7 17:39:14 xenserver1 kernel: mptbase: ioc0: LogInfo(0x31140000): Originator={PL}, Code={IO Executed}, SubCode(0x0000)

Apr 7 17:39:14 xenserver1 kernel: mptscsih: ioc0: ERROR – Received a mf that was already freed

Apr 7 17:39:14 xenserver1 kernel: mptscsih: ioc0: ERROR – req_idx=beaf req_idx_MR=d2 mf=ec989100 mr=ec9822b0 sc=(null)

Apr 7 17:39:16 xenserver1 kernel: sd 0:0:0:0: alua: port group 00 state A supports touSnA

Multipath state:

22202000155fdb615 dm-0 Intel,Multi-Flex

[size=100G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=50][active]

\_ 0:0:0:0 sda 8:0 [active][ready]

Messages:

Apr 7 17:47:36 xenserver1 kernel: mptsas: ioc0: add expander: num_phys 25, sas_addr (0x5001e671d12253ff)

Apr 7 17:47:37 xenserver1 kernel: mptsas: ioc0: attaching ssp device: fw_channel 0, fw_id 1, phy 11, sas_addr 0x500015500002050a

Apr 7 17:47:37 xenserver1 kernel: target0:0:3: mptsas: ioc0: add device: fw_channel 0, fw_id 1, phy 11, sas_addr 0x500015500002050a

Apr 7 17:47:37 xenserver1 kernel: scsi 0:0:3:0: Direct-Access Intel Multi-Flex 0308 PQ: 0 ANSI: 5

Apr 7 17:47:37 xenserver1 kernel: scsi 0:0:3:0: mptscsih: ioc0: qdepth=64, tagged=1, simple=1, ordered=0, scsi_level=6, cmd_que=1

Apr 7 17:47:37 xenserver1 kernel: scsi 0:0:3:0: alua: supports explicit TPGS

Apr 7 17:47:37 xenserver1 kernel: scsi 0:0:3:0: alua: port group 01 rel port 06

Apr 7 17:47:37 xenserver1 kernel: scsi 0:0:3:0: alua: port group 01 state S supports touSnA

Apr 7 17:47:37 xenserver1 kernel: sd 0:0:3:0: Attached scsi generic sg1 type 0

Apr 7 17:47:37 xenserver1 kernel: sd 0:0:3:0: [sdb] 209715200 512-byte logical blocks: (107 GB/100 GiB)

Apr 7 17:47:37 xenserver1 kernel: sd 0:0:3:0: [sdb] Write Protect is off

Apr 7 17:47:37 xenserver1 kernel: sd 0:0:3:0: [sdb] Write cache: enabled, read cache: enabled, supports DPO and FUA

Apr 7 17:47:37 xenserver1 kernel: sdb:

Apr 7 17:47:37 xenserver1 kernel: ldm_validate_partition_table(): Disk read failed.

Apr 7 17:47:37 xenserver1 kernel: unable to read partition table

Apr 7 17:47:37 xenserver1 kernel: sd 0:0:3:0: [sdb] Attached SCSI disk

Apr 7 17:47:39 xenserver1 multipathd: sdb: add path (operator)

Apr 7 17:47:39 xenserver1 multipathd: 22202000155fdb615: load table [0 209715200 multipath 1 queue_if_no_path 1 alua 2 1 round-robin 0 1 1 8:0 100 round-robin 0 1 1

Apr 7 17:47:39 xenserver1 multipathd: Path event for 22202000155fdb615, calling mpathcount

Apr 7 17:47:53 xenserver1 kernel: sd 0:0:0:0: alua: port group 00 state A supports touSnA

Multipath state:

22202000155fdb615 dm-0 Intel,Multi-Flex

[size=100G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=50][active]

\_ 0:0:0:0 sda 8:0 [active][ready]

\_ round-robin 0 [prio=1][enabled]

\_ 0:0:3:0 sdb 8:16 [active][ready]

Messages:

Apr 7 17:52:54 xenserver1 multipathd: sda3: remove path (operator)

Apr 7 17:52:54 xenserver1 multipathd: sda3: spurious uevent, path not in pathvec

Apr 7 17:52:54 xenserver1 multipathd: sda2: remove path (operator)

Apr 7 17:52:54 xenserver1 multipathd: sda2: spurious uevent, path not in pathvec

Apr 7 17:52:54 xenserver1 multipathd: sda1: remove path (operator)

Apr 7 17:52:54 xenserver1 multipathd: sda1: spurious uevent, path not in pathvec

Apr 7 17:52:54 xenserver1 kernel: sd 0:0:0:0: [sda] Synchronizing SCSI cache

Apr 7 17:52:54 xenserver1 kernel: sd 0:0:0:0: [sda] Result: hostbyte=DID_NO_CONNECT driverbyte=DRIVER_OK

Apr 7 17:52:54 xenserver1 kernel: mptsas: ioc0: delete expander: num_phys 25, sas_addr (0x5001e671d12252ff)

Apr 7 17:52:55 xenserver1 kernel: mptbase: ioc0: LogInfo(0x31140000): Originator={PL}, Code={IO Executed}, SubCode(0x0000)

Apr 7 17:52:55 xenserver1 kernel: mptscsih: ioc0: ERROR - Received a mf that was already freed

Apr 7 17:52:55 xenserver1 kernel: mptscsih: ioc0: ERROR - req_idx=beaf req_idx_MR=6d mf=ec985e80 mr=ec9817c0 sc=(null)

Apr 7 17:52:55 xenserver1 multipathd: sda: remove path (operator)

Apr 7 17:52:55 xenserver1 multipathd: 22202000155fdb615: load table [0 209715200 multipath 1 queue_if_no_path 1 alua 1 1 round-robin 0 1 1 8:16 100]

Apr 7 17:52:55 xenserver1 multipathd: Path event for 22202000155fdb615, calling mpathcount

Apr 7 17:52:56 xenserver1 kernel: sd 0:0:3:0: alua: port group 01 state S supports touSnA

Apr 7 17:52:56 xenserver1 kernel: sd 0:0:3:0: alua: port group 01 switched to state A
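
The failover itself is visible in the `alua:` kernel lines: the surviving port group is first reported in state S and then switched to state A. As a rough sketch for pulling these events out of a longer log, assuming the syslog line format shown in this post, something like the following Python could be used. The function name `alua_events` is hypothetical, and the letter meanings in `STATE_NAMES` reflect my understanding of the kernel ALUA handler rather than anything stated in the logs.

```python
import re

# Assumed meaning of the single-letter states printed by the kernel ALUA handler.
STATE_NAMES = {
    "A": "active/optimized",
    "N": "active/non-optimized",
    "S": "standby",
    "U": "unavailable",
}

ALUA_RE = re.compile(
    r"^(?P<stamp>\w{3}\s+\d+\s+[\d:]+)\s+\S+\s+kernel:\s+"
    r"(?:sd|scsi)\s+(?P<hctl>\d+:\d+:\d+:\d+):\s+alua:\s+port group\s+"
    r"(?P<group>[0-9a-f]+)\s+"
    r"(?:state\s+(?P<state>\w)\b|switched to state\s+(?P<new_state>\w))"
)


def alua_events(lines):
    """Return (timestamp, h:c:t:l, port group, state letter, kind) tuples."""
    events = []
    for line in lines:
        match = ALUA_RE.match(line.strip())
        if not match:
            continue  # not an ALUA state report or state switch
        if match.group("new_state"):
            state, kind = match.group("new_state"), "switch"
        else:
            state, kind = match.group("state"), "report"
        events.append(
            (match.group("stamp"), match.group("hctl"), match.group("group"), state, kind)
        )
    return events


# Sample lines copied from the logs in this post.
LOG = [
    "Apr 7 17:47:37 xenserver1 kernel: scsi 0:0:3:0: alua: port group 01 rel port 06",
    "Apr 7 17:52:56 xenserver1 kernel: sd 0:0:3:0: alua: port group 01 state S supports touSnA",
    "Apr 7 17:52:56 xenserver1 kernel: sd 0:0:3:0: alua: port group 01 switched to state A",
]

for stamp, hctl, group, state, kind in alua_events(LOG):
    print(stamp, hctl, f"group {group}", kind, STATE_NAMES.get(state, state))
```

Lines such as `rel port 06` are skipped on purpose, since they carry no state information.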

Multipath state:

22202000155fdb615 dm-0 Intel,Multi-Flex

[size=100G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=1][active]

\_ 0:0:3:0 sdb 8:16 [active][ready]

Messages:

Apr 7 18:03:40 xenserver1 kernel: mptsas: ioc0: add expander: num_phys 25, sas_addr (0x5001e671d12252ff)

Apr 7 18:03:41 xenserver1 kernel: mptsas: ioc0: attaching ssp device: fw_channel 0, fw_id 0, phy 11, sas_addr 0x500015500002040a

Apr 7 18:03:41 xenserver1 kernel: target0:0:4: mptsas: ioc0: add device: fw_channel 0, fw_id 0, phy 11, sas_addr 0x500015500002040a

Apr 7 18:03:41 xenserver1 kernel: scsi 0:0:4:0: Direct-Access Intel Multi-Flex 0308 PQ: 0 ANSI: 5

Apr 7 18:03:41 xenserver1 kernel: scsi 0:0:4:0: mptscsih: ioc0: qdepth=64, tagged=1, simple=1, ordered=0, scsi_level=6, cmd_que=1

Apr 7 18:03:41 xenserver1 kernel: scsi 0:0:4:0: alua: supports explicit TPGS

Apr 7 18:03:41 xenserver1 kernel: scsi 0:0:4:0: alua: port group 00 rel port 03

Apr 7 18:03:41 xenserver1 kernel: scsi 0:0:4:0: alua: port group 00 state S supports touSnA

Apr 7 18:03:41 xenserver1 kernel: sd 0:0:4:0: Attached scsi generic sg0 type 0

Apr 7 18:03:41 xenserver1 kernel: sd 0:0:4:0: [sda] 209715200 512-byte logical blocks: (107 GB/100 GiB)

Apr 7 18:03:41 xenserver1 kernel: sd 0:0:4:0: [sda] Write Protect is off

Apr 7 18:03:41 xenserver1 kernel: sd 0:0:4:0: [sda] Write cache: enabled, read cache: enabled, supports DPO and FUA

Apr 7 18:03:41 xenserver1 kernel: sda:

Apr 7 18:03:41 xenserver1 kernel: ldm_validate_partition_table(): Disk read failed.

Apr 7 18:03:41 xenserver1 kernel: unable to read partition table

Apr 7 18:03:41 xenserver1 kernel: sd 0:0:4:0: [sda] Attached SCSI disk

Apr 7 18:03:43 xenserver1 multipathd: sda: add path (operator)

Apr 7 18:03:43 xenserver1 multipathd: 22202000155fdb615: load table [0 209715200 multipath 1 queue_if_no_path 1 alua 2 1 round-robin 0 1 1 8:0 100 round-robin 0 1 1

Apr 7 18:03:43 xenserver1 multipathd: Path event for 22202000155fdb615, calling mpathcount

Apr 7 18:03:57 xenserver1 kernel: sd 0:0:4:0: alua: port group 00 state S supports touSnA

Apr 7 18:03:57 xenserver1 kernel: sd 0:0:4:0: alua: port group 00 switched to state A

Multipath state:

22202000155fdb615 dm-0 Intel,Multi-Flex

[size=100G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=50][active]

\_ 0:0:4:0 sda 8:0 [active][ready]

\_ round-robin 0 [prio=1][enabled]

\_ 0:0:3:0 sdb 8:16 [active][ready]

Messages:

Apr 7 18:09:31 xenserver1 kernel: sd 0:0:4:0: [sda] Synchronizing SCSI cache

Apr 7 18:09:31 xenserver1 kernel: sd 0:0:4:0: [sda] Result: hostbyte=DID_NO_CONNECT driverbyte=DRIVER_OK

Apr 7 18:09:31 xenserver1 kernel: mptsas: ioc0: delete expander: num_phys 25, sas_addr (0x5001e671d12252ff)

Apr 7 18:09:31 xenserver1 multipathd: sda: remove path (operator)

Apr 7 18:09:31 xenserver1 multipathd: 22202000155fdb615: load table [0 209715200 multipath 1 queue_if_no_path 1 alua 1 1 round-robin 0 1 1 8:16 100]

Apr 7 18:09:31 xenserver1 multipathd: Path event for 22202000155fdb615, calling mpathcount

Apr 7 18:09:37 xenserver1 kernel: sd 0:0:3:0: alua: port group 01 state A supports touSnA

Multipath state:

22202000155fdb615 dm-0 Intel,Multi-Flex

[size=100G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=1][active]

\_ 0:0:3:0 sdb 8:16 [active][ready]

Messages:

Apr 7 18:17:55 xenserver1 kernel: mptsas: ioc0: add expander: num_phys 25, sas_addr (0x5001e671d12252ff)

Apr 7 18:17:57 xenserver1 kernel: mptsas: ioc0: attaching ssp device: fw_channel 0, fw_id 0, phy 11, sas_addr 0x500015500002040a

Apr 7 18:17:57 xenserver1 kernel: target0:0:5: mptsas: ioc0: add device: fw_channel 0, fw_id 0, phy 11, sas_addr 0x500015500002040a

Apr 7 18:17:57 xenserver1 kernel: scsi 0:0:5:0: Direct-Access Intel Multi-Flex 0308 PQ: 0 ANSI: 5

Apr 7 18:17:57 xenserver1 kernel: scsi 0:0:5:0: mptscsih: ioc0: qdepth=64, tagged=1, simple=1, ordered=0, scsi_level=6, cmd_que=1

Apr 7 18:17:57 xenserver1 kernel: scsi 0:0:5:0: alua: supports explicit TPGS

Apr 7 18:17:57 xenserver1 kernel: scsi 0:0:5:0: alua: port group 00 rel port 03

Apr 7 18:17:57 xenserver1 kernel: scsi 0:0:5:0: alua: port group 00 state S supports touSnA

Apr 7 18:17:57 xenserver1 kernel: sd 0:0:5:0: Attached scsi generic sg0 type 0

Apr 7 18:17:57 xenserver1 kernel: sd 0:0:5:0: [sda] 209715200 512-byte logical blocks: (107 GB/100 GiB)

Apr 7 18:17:57 xenserver1 kernel: sd 0:0:5:0: [sda] Write Protect is off

Apr 7 18:17:57 xenserver1 kernel: sd 0:0:5:0: [sda] Write cache: enabled, read cache: enabled, supports DPO and FUA

Apr 7 18:17:57 xenserver1 kernel: sda:

Apr 7 18:17:57 xenserver1 kernel: ldm_validate_partition_table(): Disk read failed.

Apr 7 18:17:57 xenserver1 kernel: unable to read partition table

Apr 7 18:17:57 xenserver1 kernel: sd 0:0:5:0: [sda] Attached SCSI disk

Apr 7 18:17:58 xenserver1 multipathd: sda: add path (operator)

Apr 7 18:17:58 xenserver1 multipathd: 22202000155fdb615: load table [0 209715200 multipath 1 queue_if_no_path 1 alua 2 1 round-robin 0 1 1 8:0 100 round-robin 0 1 1

Apr 7 18:17:58 xenserver1 multipathd: Path event for 22202000155fdb615, calling mpathcount

Apr 7 18:18:06 xenserver1 kernel: sd 0:0:5:0: alua: port group 00 state S supports touSnA

Apr 7 18:18:06 xenserver1 kernel: sd 0:0:5:0: alua: port group 00 switched to state A

Multipath state:

22202000155fdb615 dm-0 Intel,Multi-Flex

[size=100G][features=1 queue_if_no_path][hwhandler=1 alua][rw]

\_ round-robin 0 [prio=50][active]

\_ 0:0:5:0 sda 8:0 [active][ready]

\_ round-robin 0 [prio=1][enabled]

\_ 0:0:3:0 sdb 8:16 [active][ready]
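
The `load table` entries that multipathd logs above are device-mapper multipath tables, and they can be decoded field by field. The sketch below assumes the usual dm-multipath table layout (feature arguments, hardware handler arguments, then the path groups) and 512-byte sectors. The function name `parse_multipath_table` is hypothetical. It uses the complete single-path table from the logs, because the two-path entries are cut off in the log output.

```python
def parse_multipath_table(table):
    """Decode a device-mapper multipath table line into a dictionary."""
    tok = table.split()
    start, length, target = int(tok[0]), int(tok[1]), tok[2]
    if target != "multipath":
        raise ValueError("not a multipath table: %r" % target)
    i = 3

    # Feature arguments, e.g. "1 queue_if_no_path".
    n_feat = int(tok[i])
    features = tok[i + 1:i + 1 + n_feat]
    i += 1 + n_feat

    # Hardware handler arguments, e.g. "1 alua".
    n_hw = int(tok[i])
    hwhandler = tok[i + 1:i + 1 + n_hw]
    i += 1 + n_hw

    # Number of path groups and the group to try first.
    n_groups, init_group = int(tok[i]), int(tok[i + 1])
    i += 2

    groups = []
    for _ in range(n_groups):
        selector = tok[i]
        n_sel_args = int(tok[i + 1])
        i += 2 + n_sel_args
        n_paths, n_path_args = int(tok[i]), int(tok[i + 1])
        i += 2
        paths = []
        for _ in range(n_paths):
            # Each path is a major:minor device plus its selector arguments.
            paths.append((tok[i], tok[i + 1:i + 1 + n_path_args]))
            i += 1 + n_path_args
        groups.append({"selector": selector, "paths": paths})

    return {
        "start": start,
        "size_bytes": length * 512,  # table lengths are in 512-byte sectors
        "features": features,
        "hwhandler": hwhandler,
        "init_group": init_group,
        "groups": groups,
    }


# Single-path table copied from the logs in this post.
TABLE = "0 209715200 multipath 1 queue_if_no_path 1 alua 1 1 round-robin 0 1 1 8:16 100"

info = parse_multipath_table(TABLE)
print(info["size_bytes"] / 2**30, "GiB")
print(info["features"], info["hwhandler"], info["groups"])
```

The 209715200 sectors work out to 107374182400 bytes, which is exactly 100 GiB and agrees with the `[size=100G]` shown in the multipath state.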